Delegation Creates Verification Debt

Delegating judgement to conversational systems does not remove responsibility. It postpones it.

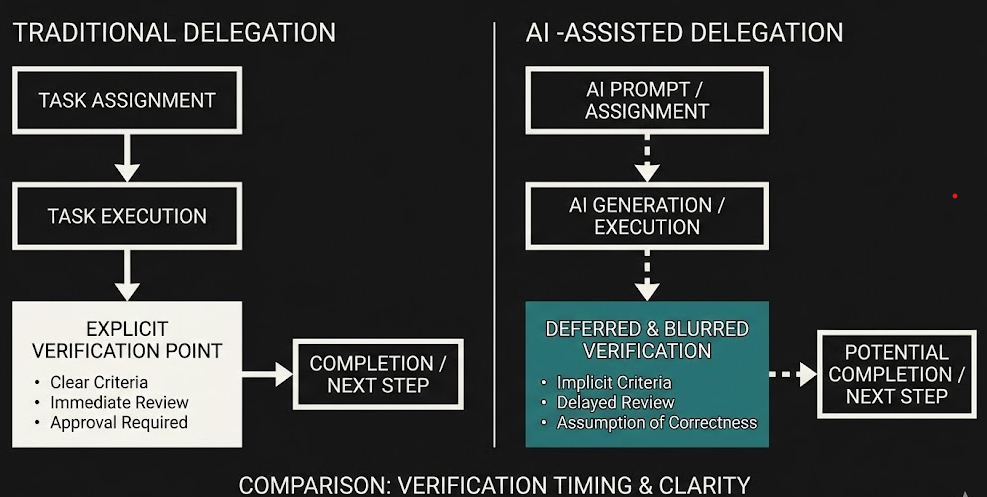

The usual defence is pragmatic. Delegation is how complex work gets done. No one recalculates actuarial tables or re-derives clinical guidelines. Tools extend capacity. This reasoning misses a crucial difference. Traditional delegation moved work to other people or to fixed instruments whose limits were visible. Conversational AI absorbs judgement while obscuring where verification is now required. The debt accumulates quietly.

Verification does not disappear. It moves.

In human systems, delegation has always created an obligation to check. The cost of that check was explicit. Time was booked. Expertise was named. Accountability sat somewhere identifiable. Conversational systems change the texture of this exchange. They return outputs that look finished, neutral, and internally consistent. The absence of visible seams invites the user to treat the delegation as complete rather than provisional.

A single decision illustrates the shift. A senior manager asks for an analysis of regulatory exposure ahead of a board discussion. The response is structured, cautious, and well phrased. The manager scans it, feels oriented, and forwards it with minimal modification. They intend to verify key points later. The meeting arrives. Time runs out. The text stands in for the analysis. The verification never occurs, yet the delegation feels justified because nothing obviously went wrong.

The debt remains unpaid.

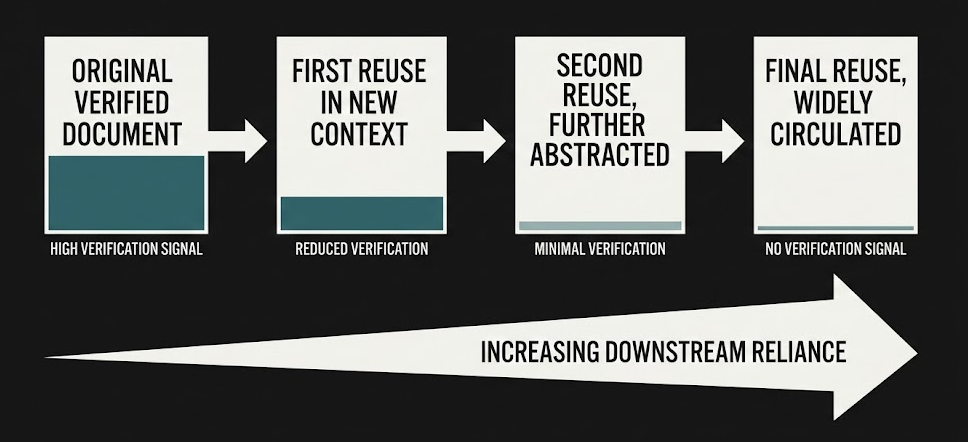

This pattern is reinforced by how work moves through organisations. A risk summary generated with assistance is copied into a briefing deck. That deck feeds into minutes. The minutes are referenced in a follow-up paper. At each step, the text gains authority while losing traceability. When questions eventually arise, the original judgement is several documents removed from the one being questioned. Rechecking it now costs more than accepting it.

Figure 1: Delegation shifting the point of verification

What makes this dangerous is not error frequency but error timing. When verification is delayed, mistakes surface only after they have propagated. By then, correction is costly and socially awkward. People defend the artefact because they have already acted on it. The organisation treats the output as a shared assumption rather than a contestable claim.

There is also a behavioural asymmetry. Producing fluent text feels like work. Checking it feels like interruption. In environments where throughput is rewarded, the interruption loses. Over time, teams learn that nothing bad happens immediately when verification slips. The habit hardens.

A second micro-example shows the institutional version. An assessment rubric is revised using generated language to improve clarity. The new version circulates and is adopted. Months later, markers report inconsistent grading outcomes. Investigating the rubric reveals subtle shifts in criteria emphasis introduced during rewriting. No one can say when or why those shifts occurred. The delegation to rewrite language altered judgement without a deliberate decision to do so.

Figure 2: Accumulation of verification debt through reuse

This does not imply that all delegation to conversational systems is reckless. Low-stakes drafting, exploratory summarisation, and personal sense-making often benefit. The problem arises when delegation crosses into judgement and no one deliberately decides where verification will now take place. In such cases, responsibility does not vanish. It becomes diffuse.

Training people to be sceptical will not resolve this alone. Scepticism is fragile under time pressure. What matters is whether systems make the location of responsibility and the need for checking unavoidable. If verification remains optional, it will be postponed. If it is postponed often enough, it will be forgotten.

Delegation always creates debt. The question is whether anyone is still keeping the ledger.